OpenBench

A Universal Land Surface Model Evaluation System

The Fundamental Principles and Architectural Blueprint of OpenBench

Land Surface Models (LSMs), as the critical link connecting various Earth system spheres (atmosphere, ocean, land), have experienced rapid development in complexity and resolution over recent decades. Models have evolved from simple “bucket” models to complex multi-module systems incorporating biogeochemical processes, geophysical processes, and even human activities, with spatial resolution improving from tens of kilometers to kilometer or even sub-kilometer scales. This dramatic increase in complexity poses unprecedented demands on model evaluation and validation tools. However, existing evaluation frameworks are gradually revealing their inherent limitations when dealing with new-generation LSMs.

These limitations manifest at multiple levels. First, many evaluation tools are custom-built for specific models, such as AMBER for the CLASSIC model and benchcab for the CABLE model. Researchers who try a new model must invest significant time learning tool-specific usage and data formats, which limits cross-model comparison and slows scientific progress. Second, general platforms such as the International Land Model Benchmarking (ILAMB) project are powerful, but they require model outputs to be converted to strict CMIP (Coupled Model Intercomparison Project) standards. This conversion is time-consuming and may introduce errors that affect evaluation reliability.

However, a more fundamental gap is that almost all existing evaluation systems fail to comprehensively and systematically evaluate the impact of human activities on land surface processes. In the context of the Anthropocene, human activities such as agricultural irrigation, urbanization, and reservoir management have become dominant drivers of surface processes. The data for these activities are typically small-scale, diverse in sources, and highly uncertain, making their effective evaluation one of the core challenges facing current LSM development.

Modular, High-Performance, and Accessible Design Architecture

To address these challenges, OpenBench is designed as an open-source, cross-platform, high-performance universal LSM evaluation system. Its architectural choices reflect careful consideration of future adaptability, community collaboration, and computational efficiency, not merely technical implementation convenience.

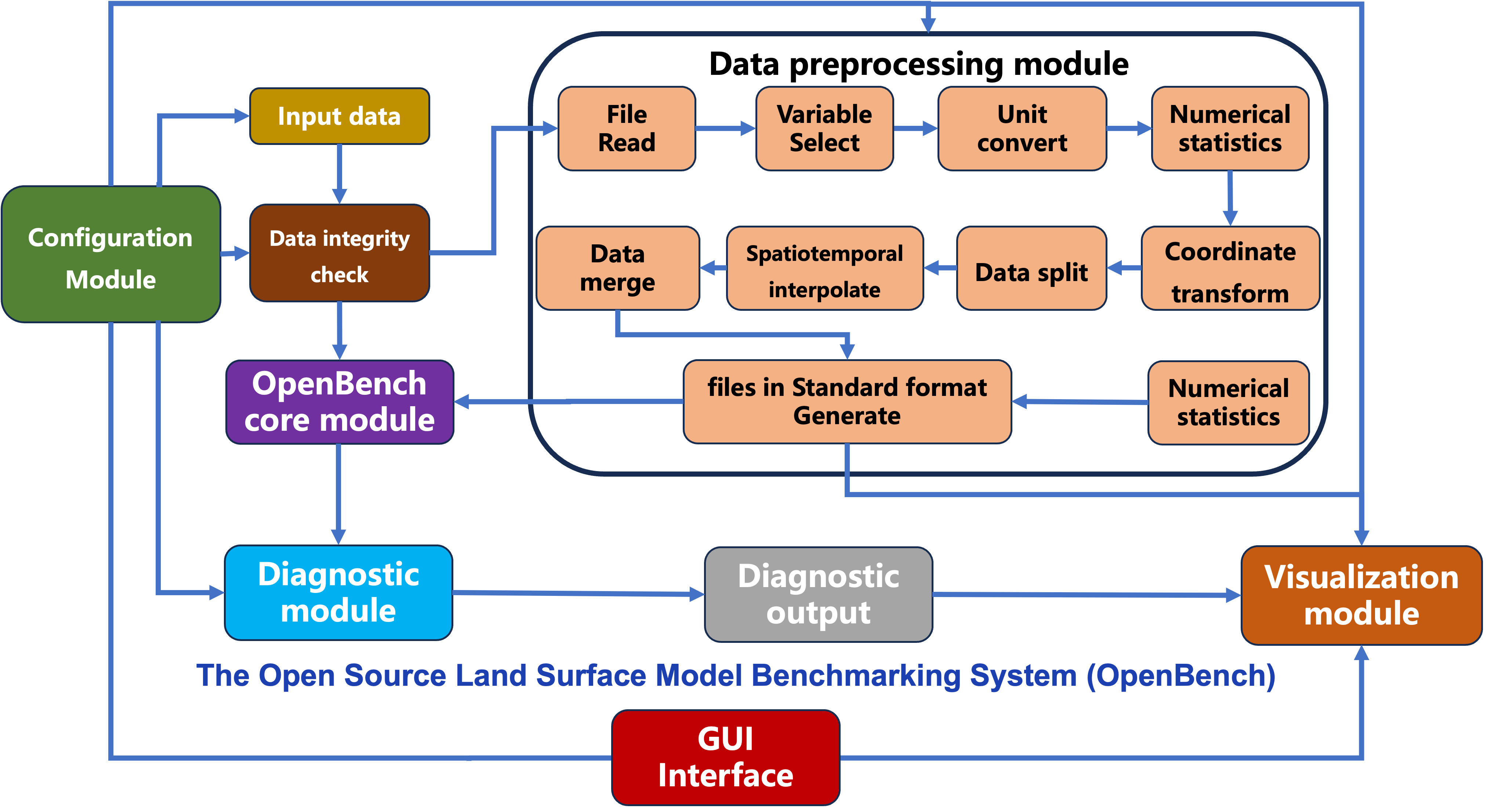

OpenBench’s system architecture is based on six core modular components, a design that is the cornerstone of its flexibility and extensibility:

- Configuration Management Module: Supports three configuration formats (YAML, JSON, and Fortran namelist), allowing users to define evaluation parameters, data sources, and model outputs in familiar ways, greatly simplifying evaluation scenario customization.

- Data Processing Module: Responsible for preprocessing reference data and model simulation data, including spatiotemporal resampling, coordinate transformation, unit conversion, etc., ensuring that data from different sources and resolutions are compared under unified standards.

- Evaluation Module: Implements core evaluation logic, applying extensive evaluation metrics and scoring systems to quantify model performance. This module supports evaluation of both site data and gridded data, automatically adjusting methods based on data type.

- Comparison Processing Module: Supports comparative analysis of multiple models, multiple parameterization schemes, and multiple scenarios, key to understanding inter-model differences and uncertainties.

- Statistical Analysis Module: Integrates advanced statistical techniques, providing deeper insights into model behavior and performance patterns.

- Visualization Module: Capable of generating high-quality, customizable charts for intuitively interpreting and communicating complex evaluation results.
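To make the interplay between the configuration and data processing modules concrete, the following is a minimal sketch, not OpenBench's actual code. The configuration keys, the dataset names, and the helper functions `load_config` and `to_reference_units` are all hypothetical. The sketch parses a JSON configuration and converts simulated values into the unit of the reference dataset before any comparison.

```python
import json

# Hypothetical configuration; the key names are illustrative, not OpenBench's schema.
CONFIG_TEXT = """
{
  "variable": "Evapotranspiration",
  "reference": {"name": "ref_product", "unit": "mm day-1"},
  "simulation": {"name": "my_model", "unit": "kg m-2 s-1"}
}
"""

# Multiplicative factors keyed by (source unit, target unit).
# For liquid water, 1 kg m-2 s-1 equals 1 mm s-1, i.e. 86400 mm day-1.
UNIT_FACTORS = {
    ("kg m-2 s-1", "mm day-1"): 86400.0,
    ("mm day-1", "kg m-2 s-1"): 1.0 / 86400.0,
}


def load_config(text):
    """Parse the JSON text and check that the required sections exist."""
    cfg = json.loads(text)
    for key in ("variable", "reference", "simulation"):
        if key not in cfg:
            raise KeyError(f"missing configuration entry: {key}")
    return cfg


def to_reference_units(values, cfg):
    """Convert simulated values into the unit of the reference dataset."""
    src = cfg["simulation"]["unit"]
    dst = cfg["reference"]["unit"]
    if src == dst:
        return list(values)
    factor = UNIT_FACTORS[(src, dst)]  # raises KeyError for unknown unit pairs
    return [v * factor for v in values]


cfg = load_config(CONFIG_TEXT)
converted = to_reference_units([1.0e-5, 2.0e-5], cfg)
```

Keeping the units in the configuration rather than in code means that adding a new model only requires a new configuration entry, which is the kind of customization the modular design is meant to allow.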

To handle the computational load from growing data volumes and high-resolution simulations, OpenBench is designed to use parallel processing throughout. It combines two mature Python libraries. For site evaluation, which is I/O-intensive and involves many independent file read/write operations, the system uses Joblib to distribute tasks across workers. For large-scale gridded data, it uses Dask’s lazy execution and chunked arrays, so that large datasets are processed in parallel without being loaded into memory all at once.
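The two parallel patterns can be illustrated with the Python standard library alone. This is a hedged sketch, not OpenBench's implementation: `ThreadPoolExecutor` stands in for Joblib's task distribution, a plain generator stands in for Dask's chunked arrays, and all function names and data are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def evaluate_site(site):
    """Return (site id, mean bias) for one site; stands in for a per-file task."""
    sim, obs = site["sim"], site["obs"]
    bias = sum(s - o for s, o in zip(sim, obs)) / len(obs)
    return site["id"], bias


def evaluate_sites(sites, workers=4):
    """Run independent site evaluations concurrently and collect the results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(evaluate_site, sites))


def iter_chunks(n_values, chunk_size):
    """Yield successive chunks of a synthetic field; a real reader would load file slices."""
    for start in range(0, n_values, chunk_size):
        stop = min(start + chunk_size, n_values)
        yield [float(i) for i in range(start, stop)]


def chunked_mean(chunks):
    """Reduce one chunk at a time, so the full field is never materialized."""
    total, count = 0.0, 0
    for chunk in chunks:
        total += sum(chunk)
        count += len(chunk)
    return total / count


sites = [
    {"id": "A", "sim": [1.0, 2.0, 3.0], "obs": [1.0, 1.0, 1.0]},
    {"id": "B", "sim": [0.0, 0.0], "obs": [1.0, 3.0]},
]
site_bias = evaluate_sites(sites)
grid_mean = chunked_mean(iter_chunks(10, 4))
```

Site tasks are independent, so they map naturally onto a worker pool. Gridded reductions instead depend on bounding how much data is in memory at once, which is why the two cases call for different tools.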

Multi-Dimensional Evaluation and Comparison Methodology

To avoid one-sided conclusions from relying on single “goodness of fit” metrics, OpenBench adopts a multi-metric evaluation strategy aimed at providing comprehensive, detailed examination of model performance from different dimensions. The system’s integrated metric library is extensive and can be systematically categorized by evaluation focus:

-

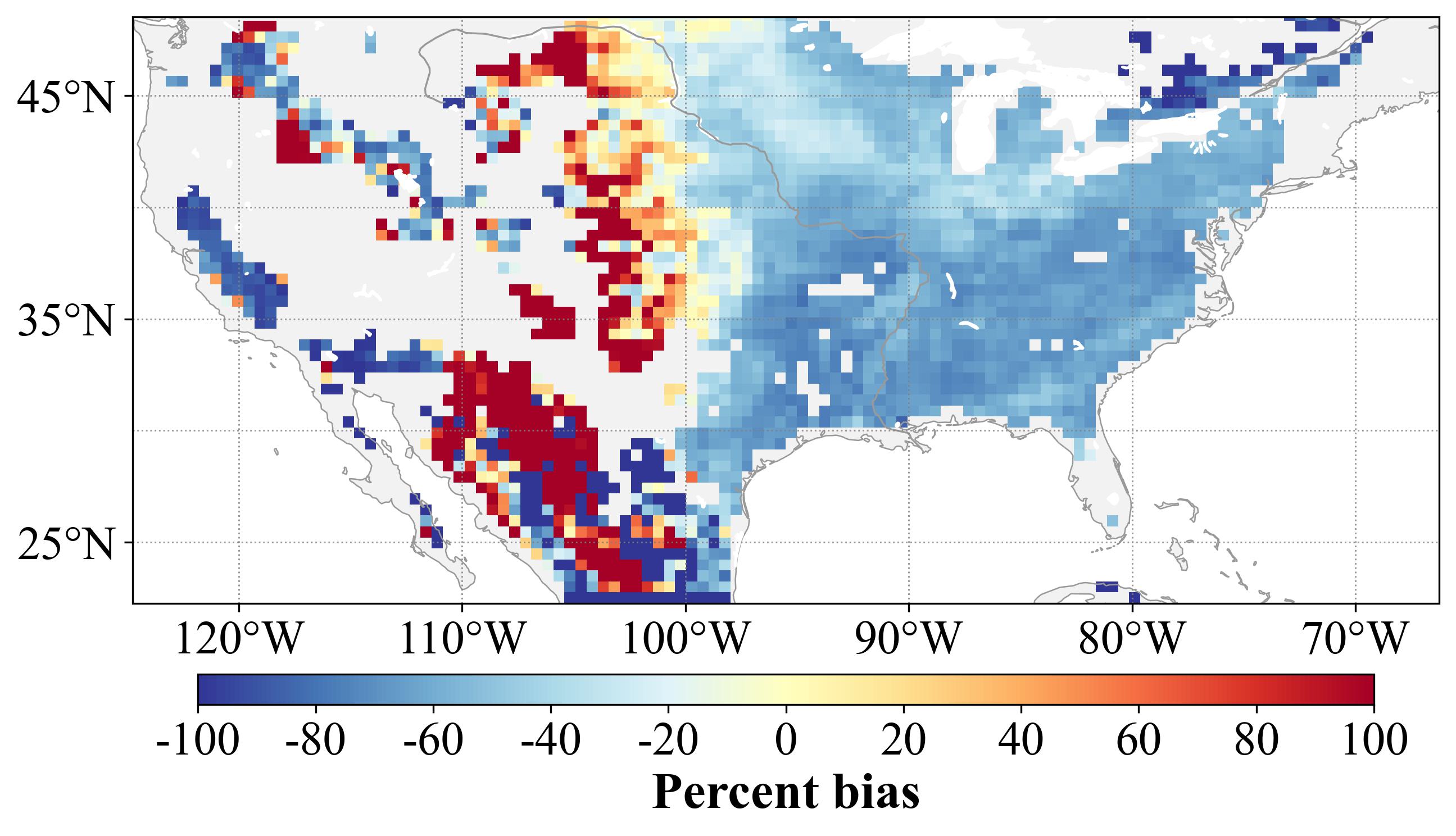

Bias Metrics: Used to quantify systematic biases between model outputs and observations. Includes basic bias (BIAS) and percent bias (PBIAS), as well as metrics for specific flow scenarios such as annual peak flow percent bias (APFB) and low flow percent bias (PBIAS_LF).

- Error Metrics: Used to measure the magnitude of model prediction errors. Core metrics include root mean square error (RMSE) and its unbiased version (ubRMSE), as well as mean absolute error (MAE), which is less sensitive to outliers than RMSE.

- Correlation Metrics: Used to evaluate how well the model tracks the variations in the observational data. Includes the classical Pearson correlation coefficient (R) and coefficient of determination (R2), as well as the Spearman rank correlation coefficient (rSpearman), which measures monotonic relationships.

- Efficiency Metrics: Compare model performance with a baseline (typically the observational mean), providing a relative performance measure. The best known are Nash-Sutcliffe efficiency (NSE) and Kling-Gupta efficiency (KGE); the latter can be decomposed into correlation, bias, and variability components, which provides more diagnostic information.

-

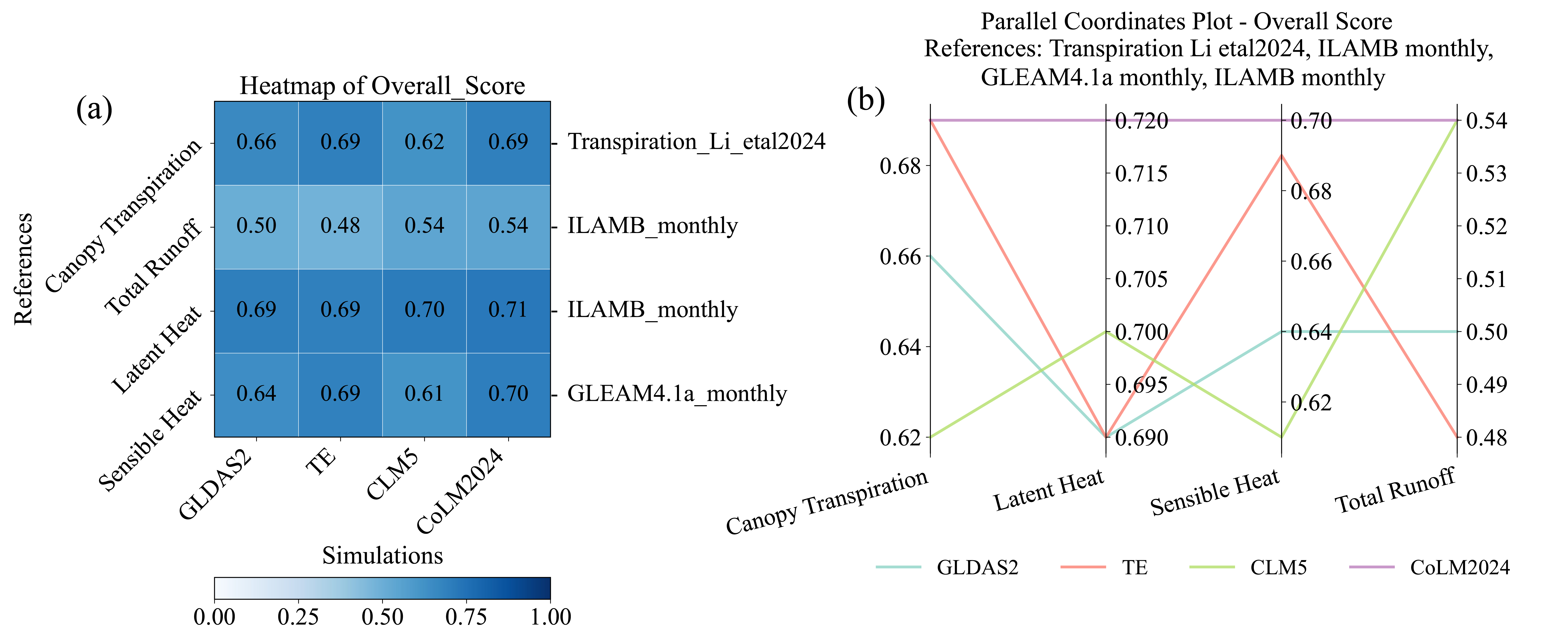

ILAMB Scoring System: Building on multi-metric evaluation, to facilitate cross-model comparisons and comprehensive result presentation, OpenBench adopts a standardized scoring index system derived from the ILAMB framework. These indices normalize different evaluation metric values to a 0-1 interval, where 1 represents perfect agreement between model and observations. While OpenBench borrows ILAMB’s scoring system, it makes two key adjustments with philosophical significance in specific implementation. The first key difference lies in the calculation of global average scores. Unlike ILAMB’s mandatory mass-weighted method, OpenBench gives users the power to choose. Users can flexibly select area-weighted (ensuring equal contribution from geographic areas), mass-weighted (consistent with ILAMB), or unweighted (simple spatial average) based on their research questions. The second, more fundamental difference lies in the treatment of multiple reference datasets. OpenBench takes a completely different path: it allows users to select one or more reference datasets they consider reliable, then independently reports model evaluation results relative to each reference dataset without any form of fusion or weighting.
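The metric families and the score aggregation described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not OpenBench's or ILAMB's implementation. The function names are hypothetical, the score mapping is assumed to be an exponential of a relative error, the area weights use the cosine of latitude (which presumes a regular latitude-longitude grid), and the mass weights are taken to be the magnitude of the reference values.

```python
import math


def mean(x):
    return sum(x) / len(x)


def std(x):
    """Population standard deviation."""
    m = mean(x)
    return math.sqrt(mean([(v - m) ** 2 for v in x]))


def pbias(sim, obs):
    """Percent bias; here positive means overestimation (sign conventions vary)."""
    return 100.0 * sum(s - o for s, o in zip(sim, obs)) / sum(obs)


def rmse(sim, obs):
    return math.sqrt(mean([(s - o) ** 2 for s, o in zip(sim, obs)]))


def ubrmse(sim, obs):
    """RMSE of the anomalies, so a constant offset between the series is ignored."""
    ms, mo = mean(sim), mean(obs)
    return math.sqrt(mean([((s - ms) - (o - mo)) ** 2 for s, o in zip(sim, obs)]))


def pearson_r(sim, obs):
    ms, mo = mean(sim), mean(obs)
    cov = mean([(s - ms) * (o - mo) for s, o in zip(sim, obs)])
    return cov / (std(sim) * std(obs))


def nse(sim, obs):
    """Nash-Sutcliffe efficiency: 1 is perfect, 0 is no better than the observed mean."""
    mo = mean(obs)
    num = sum((s - o) ** 2 for s, o in zip(sim, obs))
    return 1.0 - num / sum((o - mo) ** 2 for o in obs)


def kge(sim, obs):
    """Kling-Gupta efficiency (2009 formulation) with its three components."""
    r = pearson_r(sim, obs)        # correlation
    alpha = std(sim) / std(obs)    # variability ratio
    beta = mean(sim) / mean(obs)   # bias ratio
    value = 1.0 - math.sqrt((r - 1) ** 2 + (alpha - 1) ** 2 + (beta - 1) ** 2)
    return value, r, alpha, beta


def score(relative_error):
    """Map a non-negative relative error to (0, 1]; zero error gives 1 (assumed form)."""
    return math.exp(-relative_error)


def global_score(cell_scores, lats, ref_values, method="area"):
    """Aggregate per-cell scores with area, mass, or no weighting."""
    if method == "area":
        weights = [math.cos(math.radians(lat)) for lat in lats]
    elif method == "mass":
        weights = [abs(v) for v in ref_values]
    elif method == "none":
        weights = [1.0] * len(cell_scores)
    else:
        raise ValueError(f"unknown weighting method: {method}")
    return sum(w * s for w, s in zip(weights, cell_scores)) / sum(weights)


# One time series: the simulation is the observation plus a constant offset of 1.
obs = [1.0, 2.0, 3.0, 4.0, 5.0]
sim = [2.0, 3.0, 4.0, 5.0, 6.0]
kge_value, r, alpha, beta = kge(sim, obs)

# Two grid cells scored against two hypothetical references, reported separately.
lats = [0.0, 60.0]
cell_scores = {"ref_A": [1.0, 0.5], "ref_B": [0.8, 0.4]}
ref_values = {"ref_A": [3.0, 1.0], "ref_B": [2.0, 2.0]}
report = {
    name: {m: global_score(cell_scores[name], lats, ref_values[name], m)
           for m in ("area", "mass", "none")}
    for name in cell_scores
}
```

In the offset example, RMSE and ubRMSE disagree by design: the constant offset of 1 shows up in RMSE but vanishes in ubRMSE, and the KGE components attribute the whole deficit to the bias ratio. The `report` dictionary keeps one entry per reference dataset and never merges them, mirroring the independent reporting described above.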

New Frontiers in Land Surface Modeling: Evaluating Anthropogenic Impacts

Unlike previous evaluation systems that treat human activities as secondary factors or ignore them entirely, OpenBench makes systematic evaluation of human activity impacts its core design principle and fundamental objective. This is not an add-on feature but the starting point of the project conception. To achieve this goal, the system integrates a large-scale, diverse collection of benchmark datasets carefully selected specifically for evaluating various anthropogenic processes.

These datasets can be divided into three major categories, comprehensively covering the main aspects of human activity impacts on terrestrial surface systems:

- Agricultural Systems: To evaluate the impact of agricultural activities on water, energy, and carbon cycles, OpenBench integrates datasets on crop yields (e.g., GDHY, SPAM), agricultural water use (e.g., GIWUED), crop phenology, planting area, and productivity. These data enable validation of agricultural management (e.g., irrigation, fertilization) and crop growth processes simulated in models.

- Urban Environments: Urbanization represents one of the most dramatic alterations to the land surface. OpenBench evaluates urban physical effects by integrating datasets on urban extent (e.g., UEHNL), surface temperature, albedo, and others. Critically, it systematically introduces multiple Anthropogenic Heat Flux (AHF) datasets (e.g., AH4GUC, DONG_AHE) for the first time, enabling direct evaluation of a core issue, the urban heat island effect.

- Water Resource Management: Humans have profoundly altered the global hydrological cycle through reservoir construction, inter-basin water transfers, and other engineering projects. OpenBench integrates observational data on reservoir operations (e.g., ResOpsUS), river discharge (e.g., GRDC), and large-scale inundation areas (e.g., GIEMS_v2) to evaluate models’ hydrological simulation capabilities in human-managed watersheds.

Open Research Topics

As an open and extensible research platform, OpenBench offers rich research opportunities for graduate students and collaborators. The following are our current research focuses:

We welcome graduate students and collaborators interested in any of the above topics to contact us and jointly advance the development of land surface modeling science!

Selected Related Publications (# indicates corresponding author):

- Wei, Z.#, Xu, Q., Bai, F., Xu, X., Wei, Z., Dong, W., Liang, H., Wei, N., Lu, X., Li, L., et al. (2025). OpenBench: a land models evaluation system. Geoscientific Model Development, 2025, 1-37.

- Further related results will be published in due course…